What videos do we use for our tuning?

Everyone knows how important it is to choose good videos when evaluating video processing algorithms. Many open databases exist for this purpose, but researchers sometimes create their own sets of self-produced videos. This approach is appropriate when raw (uncompressed) videos are needed for analysis. However, such content is usually captured with nonprofessional cameras, and even though the videos include various scenes shot in different places, they all share the same noise distribution, effects (if used) and other features, which makes them unrepresentative. As for open databases, most of them either consist of just a few videos or contain outdated videos that were produced with old cameras or digitised from tapes.

Which videos are appropriate for video compression tests?

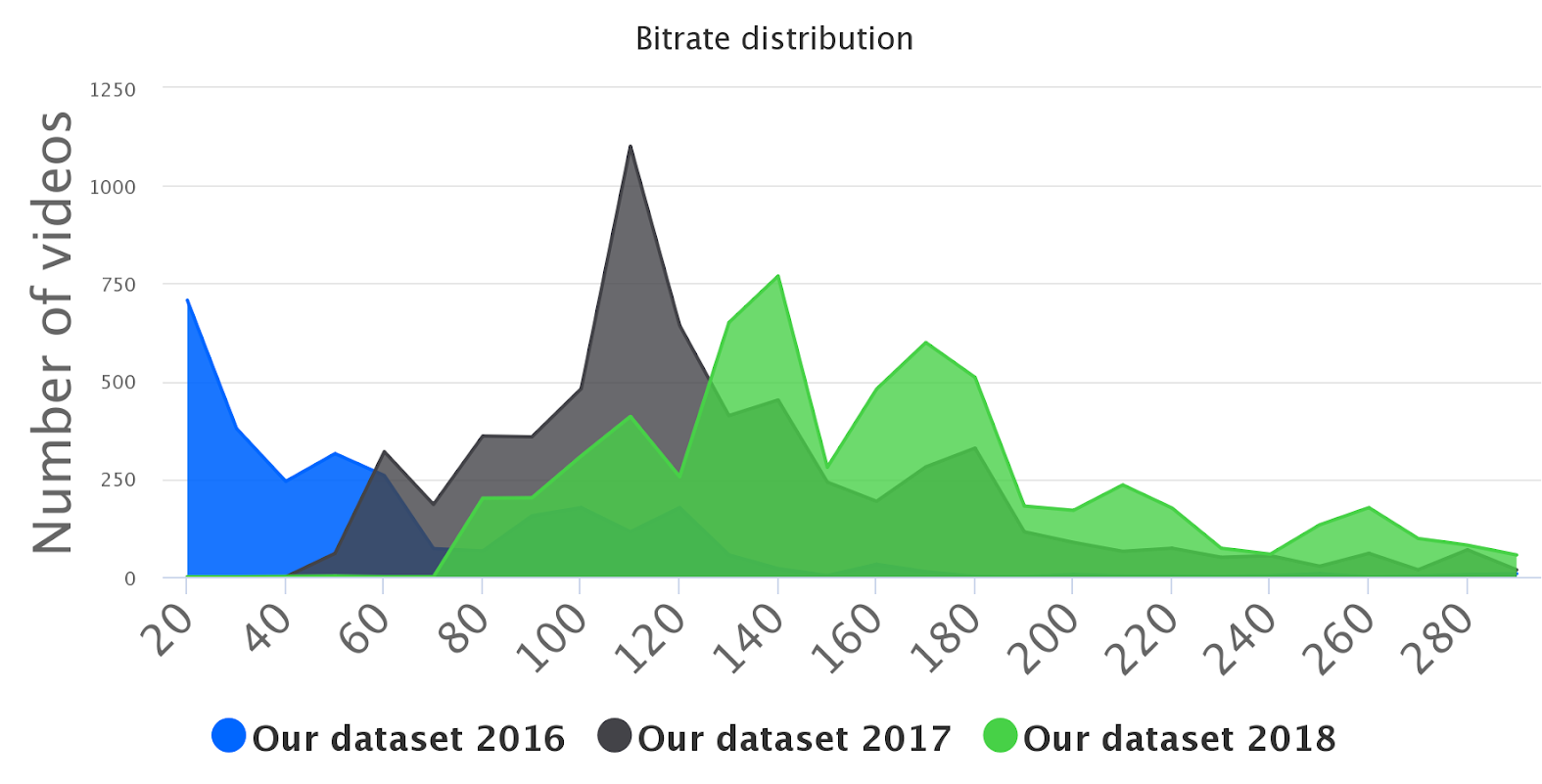

We developed a technique for creating our own data set of representative video sequences that encoders face in everyday situations. We don’t produce videos ourselves; we use open videos to preserve their variety. First, we find video sequences produced by real users that have been uploaded to video hosting sites and are available for download. So far we have analyzed more than 1,080,000 videos, looking for ones with high bitrate and resolution. A high bitrate is especially important because we use these videos to evaluate video compression algorithms. Compression needs a source with excess information to produce a meaningful result, so if a video has already been compressed at a low bitrate, recompressing it may be useless for performance analysis. That is why we continually improve our technique for finding and using high-bitrate videos. Picture 1 shows the bitrate distribution of the videos in our set by year. The bitrate of the videos in our set is growing, which makes them better suited to codec performance evaluation and analysis.

Picture 1. Bitrate distribution of videos in our set by year.
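The bitrate and resolution filter described above can be illustrated with a small sketch. It assumes that each candidate file has already been probed with a tool such as `ffprobe` (for example `ffprobe -v error -show_entries format=bit_rate:stream=width,height -of json input.mp4`) and that the JSON output has the shape used below. The threshold values and the `is_candidate` helper are hypothetical and are not taken from our actual pipeline.

```python
import json

# Hypothetical thresholds: the article only asks for "high bitrate"
# and a resolution of at least FullHD.
MIN_BITRATE_BPS = 50_000_000
MIN_WIDTH, MIN_HEIGHT = 1920, 1080


def is_candidate(probe_json: str) -> bool:
    """Return True if a probed file passes the bitrate and resolution thresholds."""
    info = json.loads(probe_json)
    # Take the first stream that reports a frame size (assumed to be the video stream).
    video = next(
        (s for s in info.get("streams", []) if "width" in s and "height" in s),
        None,
    )
    if video is None:
        return False
    # A missing bitrate is treated as 0, so the file is rejected.
    bit_rate = int(info.get("format", {}).get("bit_rate", 0))
    return (
        bit_rate >= MIN_BITRATE_BPS
        and video["width"] >= MIN_WIDTH
        and video["height"] >= MIN_HEIGHT
    )


# Made-up probe result for a 4K file at roughly 168 Mbps.
sample = json.dumps({
    "streams": [{"codec_type": "video", "width": 3840, "height": 2160}],
    "format": {"bit_rate": "167750000"},
})
print(is_candidate(sample))
```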

Overall, the videos in our set have a mean bitrate of 167.75 Mbps and a median of 130.90 Mbps, which is sufficient for compression tests. 52.69% of all videos are in ProRes, a high-quality (although still lossy) video compression format. These figures increase as new videos are added to our collection:

| | Mean bitrate | Median bitrate | ProRes format |
|---|---|---|---|
| Our set in 2016 | 71.60 Mbps | 49.11 Mbps | 3.21% |
| New videos 2017 | 153.80 Mbps | 119.06 Mbps | 53.31% |
| New videos 2018 | 190.43 Mbps | 160.80 Mbps | 73.93% |
| All | 167.75 Mbps | 130.90 Mbps | 52.69% |

Table 1. General information about bitrate of videos in our collection

For our tests we use videos with CC BY, CC BY-ND, and Public Domain licenses, which allow them to be used in studies, including commercial ones.

How many videos do we have to choose from?

User videos usually consist of various scenes with different content; for example, vlogs and TV shows contain scenes in different locations. We usually cut long videos into several parts to make our set more diverse and representative, but sometimes we run tests on full versions. We cut at scene changes, producing samples with an approximate length of 1,000 frames. We don’t use videos with a resolution lower than FullHD. Videos in 4K are sometimes used for special tests of high-resolution compression, but in other cases we resize and crop them to FullHD resolution in order to avoid compression artifacts. Table 2 shows the size of our set by year. Our sample database for 2018 consisted of 15,833 items.

| Year | # FullHD videos | # FullHD samples | # 4K videos | # 4K samples | Total # of videos | Total # of samples |
|---|---|---|---|---|---|---|
| 2016 | 3 | 7 | 882 | 2902 | 885 | 2909 |
| 2017 | 1996 | 4738 | 1544 | 4561 | 3540 | 9299 |
| 2018 | 4342 | 10330 | 1946 | 5503 | 6288 | 15833 |

Table 2. Number of videos and samples in our database by year.
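Cutting long videos at scene changes into samples of roughly 1,000 frames can be sketched as follows. This is only one possible greedy strategy, shown under the assumption that scene-change frame indices are already known. The function name `split_at_scene_changes` and the example indices are hypothetical.

```python
def split_at_scene_changes(scene_changes, total_frames, target=1000):
    """Split [0, total_frames) into samples of roughly `target` frames.

    Cuts are placed only at frame indices listed in `scene_changes`.
    Returns a list of (start, end) pairs with `end` exclusive.
    """
    cuts = sorted(c for c in set(scene_changes) if 0 < c < total_frames)
    samples, start = [], 0
    # Keep cutting while the remaining tail is longer than one target sample.
    while total_frames - start > target:
        later = [c for c in cuts if c > start]
        if not later:
            break
        # Choose the scene change that gives a sample length closest to the target.
        cut = min(later, key=lambda c: abs((c - start) - target))
        samples.append((start, cut))
        start = cut
    samples.append((start, total_frames))
    return samples


# Made-up scene changes for a 3,500-frame video.
print(split_at_scene_changes([300, 950, 1400, 2100, 2600, 3050], 3500))
```

A greedy choice keeps the sketch short. It does not guarantee that the last sample is close to the target length.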

How to distinguish videos for making a representative set?

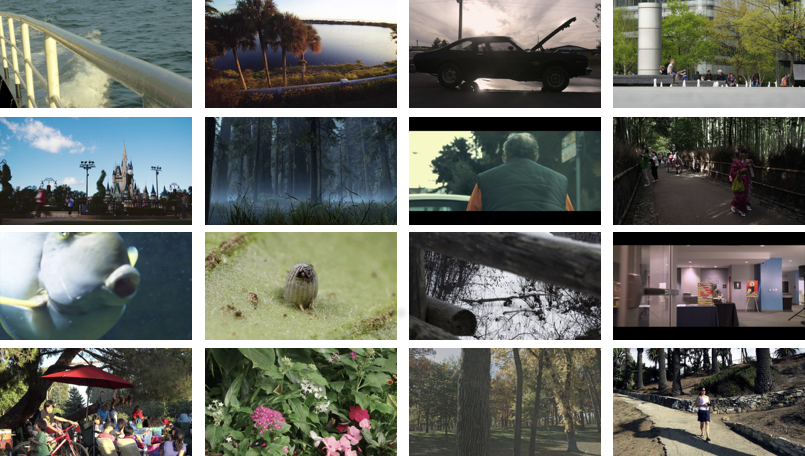

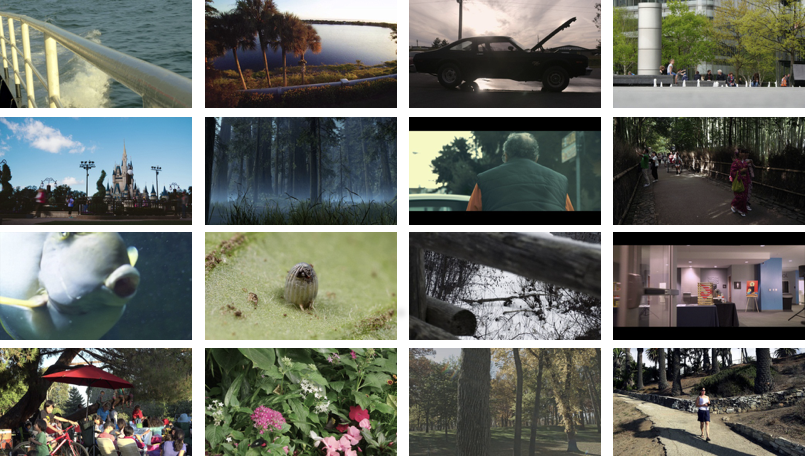

The resulting video set contains a variety of videos: indoor and outdoor scenes, macro shots, drone footage, videos with CGI effects, noisy videos, etc. (see examples in Picture 2).

Picture 2. Examples from our video set.

From a codec’s point of view, videos differ in their temporal and spatial complexity. Low complexity allows a codec to compress a video more strongly, while high complexity requires it to store more information about frames and blocks. To evaluate spatial and temporal complexity, we encoded all samples using x264 with a constant quantization parameter (QP). We calculated the temporal and spatial complexity for each scene, defining spatial complexity as the average I-frame size normalized to the sample’s uncompressed frame size. Temporal complexity in our definition is the average P-frame size divided by the average I-frame size. This approach was proposed by Google developers in [1].

We slightly changed the complexity calculation by adding a preprocessing step. We use source videos that were uploaded by users, so they have different chroma subsampling, which affects the measured complexity. Therefore, to make the spatial and temporal complexity results comparable, we converted all videos to YUV 4:2:0 chroma subsampling.
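The two definitions can be written down as a short sketch. It assumes that the frame types and compressed frame sizes of a constant-QP encode are already available as a list of pairs, and that the uncompressed frame is 8-bit YUV 4:2:0 (1.5 bytes per pixel). The `complexity` function and the example numbers are illustrative only.

```python
from statistics import mean


def complexity(frames, width, height, bytes_per_pixel=1.5):
    """Compute (spatial, temporal) complexity for one encoded sample.

    `frames` is a list of (frame_type, size_in_bytes) pairs from a
    constant-QP encode. `bytes_per_pixel=1.5` assumes 8-bit YUV 4:2:0.
    Frames of other types (for example B-frames) are ignored.
    """
    i_sizes = [size for kind, size in frames if kind == "I"]
    p_sizes = [size for kind, size in frames if kind == "P"]
    if not i_sizes or not p_sizes:
        raise ValueError("need at least one I-frame and one P-frame")
    uncompressed = width * height * bytes_per_pixel
    spatial = mean(i_sizes) / uncompressed   # average I-frame size / raw frame size
    temporal = mean(p_sizes) / mean(i_sizes)  # average P-frame size / average I-frame size
    return spatial, temporal


# Made-up frame sizes for a FullHD sample.
frames = [("I", 311040), ("P", 62208), ("P", 93312), ("I", 311040), ("P", 77760)]
spatial, temporal = complexity(frames, 1920, 1080)
print(f"spatial={spatial:.3f} temporal={temporal:.3f}")
```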

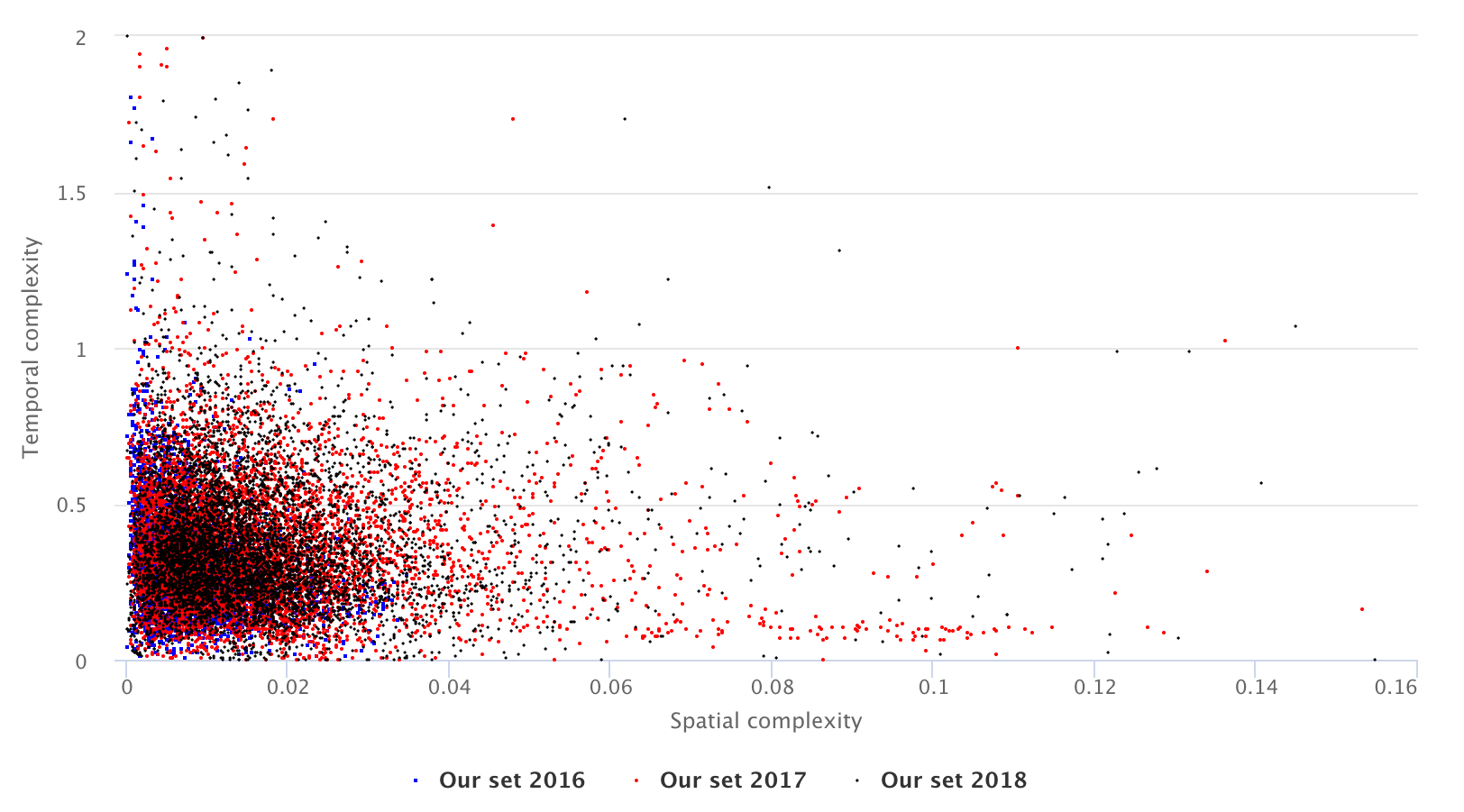

Picture 3 shows the resulting spatio-temporal complexity of the videos in our set.

Picture 3. Spatio-temporal complexity of videos in our set. Colors indicate the year in which a video was added to the set.

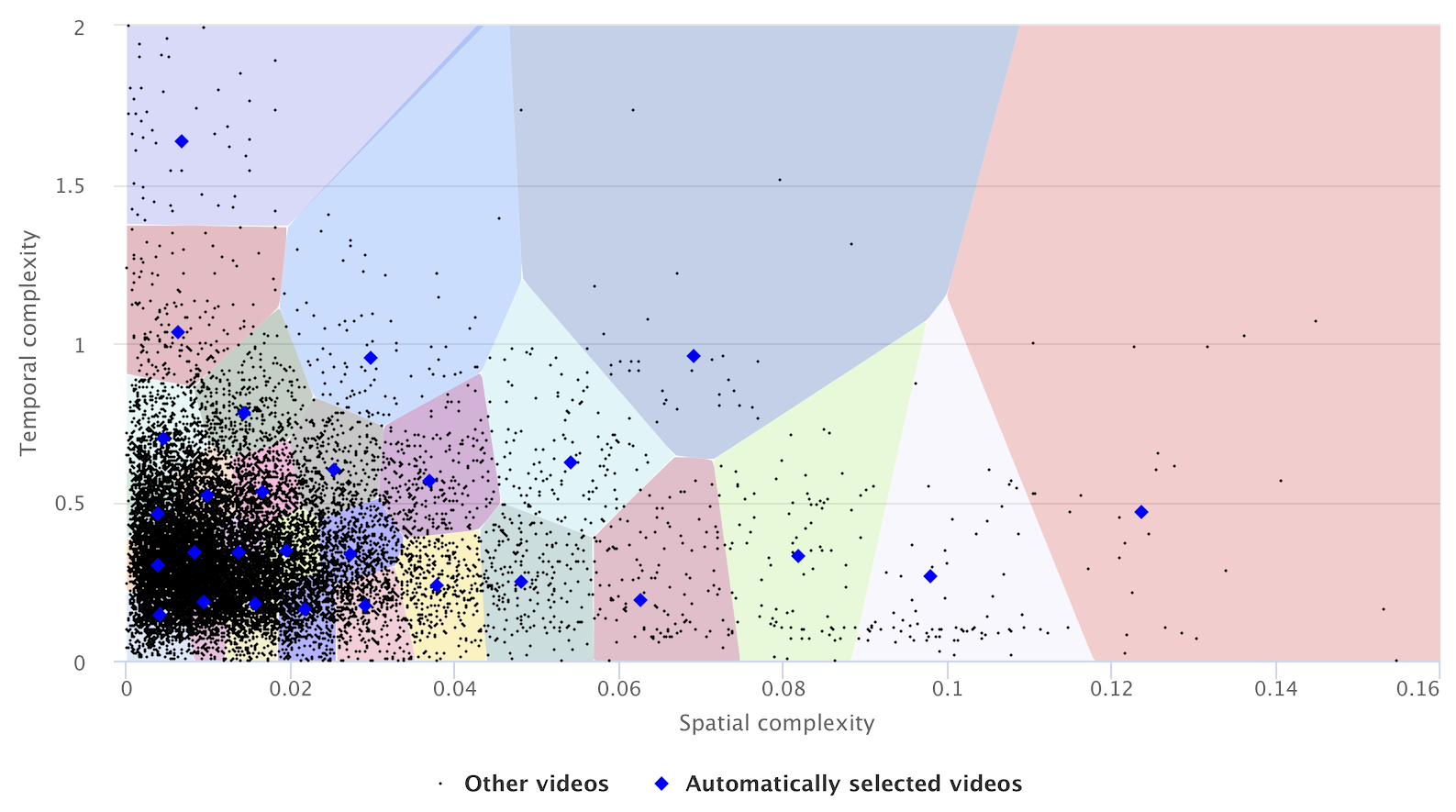

When building a video set for testing video processing and compression algorithms, we need to limit the number of test videos and choose them so that they cover the whole complexity space evenly. We used the following process to prepare the data set. First, let N be the number of test videos we want to use; we divide our video set into N clusters. Then, for each cluster, we select the video sequence that is closest to the cluster’s center and that has a license permitting derivatives and commercial use.
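The selection procedure can be sketched as below. The article does not name the clustering algorithm, so plain k-means is used here purely as an assumption. The field names (`spatial`, `temporal`, `license_ok`) and the sample data are hypothetical.

```python
import math
import random


def assign(points, centers):
    """Label each point with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
            for p in points]


def kmeans(points, k, iterations=50, seed=0):
    """Plain Lloyd's k-means on 2-D points; returns (centers, labels)."""
    centers = random.Random(seed).sample(points, k)
    for _ in range(iterations):
        labels = assign(points, centers)
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:  # an empty cluster keeps its previous center
                centers[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return centers, assign(points, centers)


def select_representatives(samples, n, seed=0):
    """Pick, per cluster, the suitably licensed sample nearest to the center."""
    points = [(s["spatial"], s["temporal"]) for s in samples]
    centers, labels = kmeans(points, n, seed=seed)
    chosen = []
    for c, center in enumerate(centers):
        eligible = [s for s, l in zip(samples, labels) if l == c and s["license_ok"]]
        if eligible:  # a cluster without a suitable license yields no video
            best = min(eligible,
                       key=lambda s: math.dist((s["spatial"], s["temporal"]), center))
            chosen.append(best["name"])
    return chosen


# Made-up samples: two well-separated groups in (spatial, temporal) space.
samples = [
    {"name": "a1", "spatial": 0.10, "temporal": 0.10, "license_ok": True},
    {"name": "a2", "spatial": 0.18, "temporal": 0.12, "license_ok": True},
    {"name": "a3", "spatial": 0.13, "temporal": 0.11, "license_ok": False},
    {"name": "b1", "spatial": 0.80, "temporal": 0.90, "license_ok": True},
    {"name": "b2", "spatial": 0.86, "temporal": 0.84, "license_ok": True},
    {"name": "b3", "spatial": 0.81, "temporal": 0.88, "license_ok": True},
]
print(sorted(select_representatives(samples, 2)))
```

In this toy data, `a3` is nearest to its cluster center but is skipped because of its license, so the next-nearest sample is chosen instead.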

A real-world example: MSU Codecs Comparison 2018

The described approach was used to choose videos for the most recent MSU Codecs Comparison. For this comparison we had to choose N=28 videos. Picture 4 shows the cluster boundaries and constituent sequences.

Picture 4. Clustering and automatic selection from our test set for target number of N=28 sequences.

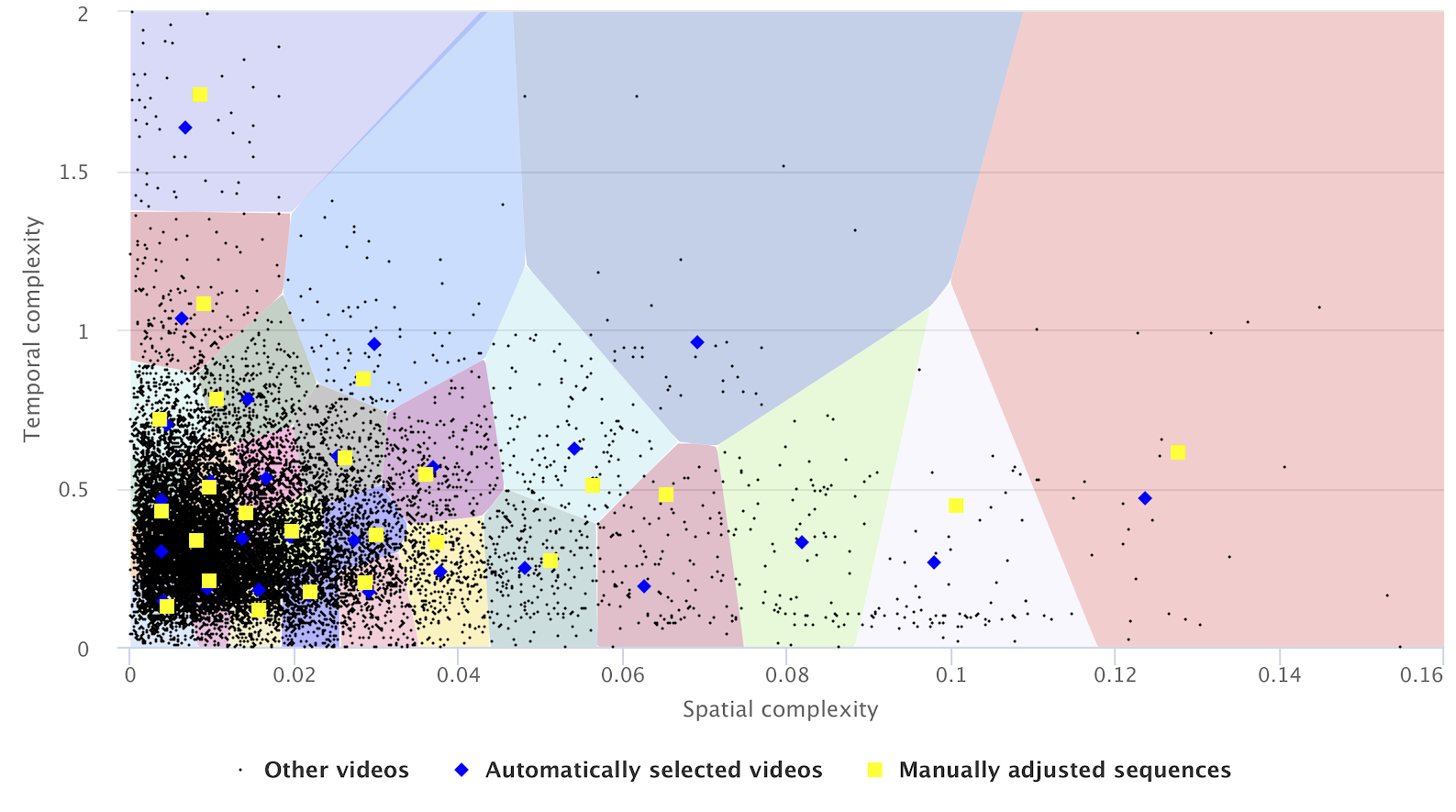

This set could have been final, but the Codecs Comparison has several rules that require the use of some videos from the previous year’s comparison and from open free video collections. To satisfy these rules, we increased the weights of videos from the previous comparison and of videos from the open media.xiph.org set. Some automatically chosen samples contain company names or have other copyright issues, so we removed them from their respective clusters and replaced them with other samples that have a suitable license. Picture 5 illustrates these adjustments.
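One way to read "increasing the weights" is to divide a candidate's distance to the cluster center by its weight, so that preferred videos look closer than they really are. This is only an illustrative interpretation, not necessarily the exact scheme we used. The `pick_weighted` helper, the weight values, and the coordinates are hypothetical.

```python
import math


def pick_weighted(center, candidates):
    """Pick the candidate with the smallest weight-adjusted distance to `center`.

    Each candidate is (name, (spatial, temporal), weight). A weight above 1
    makes a candidate more likely to be chosen.
    """
    return min(candidates, key=lambda c: math.dist(c[1], center) / c[2])[0]


center = (0.20, 0.30)
candidates = [
    ("new_sample", (0.21, 0.31), 1.0),     # nearest, ordinary weight
    ("previous_year", (0.23, 0.33), 4.0),  # farther away, but preferred
]
print(pick_weighted(center, candidates))
```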

The average bitrate of all sequences in the final set is 449.72 Mbps, and the median is 192.02 Mbps. The “Hera” (90 Mbps), “Television studio” (92 Mbps) and “Foggy beach” (93 Mbps) sequences have the lowest bitrates.

Picture 5. Final test video set for MSU Codecs Comparison 2018 (yellow dots) compared with the automatically selected videos.

Comparison with media.xiph.org video collection

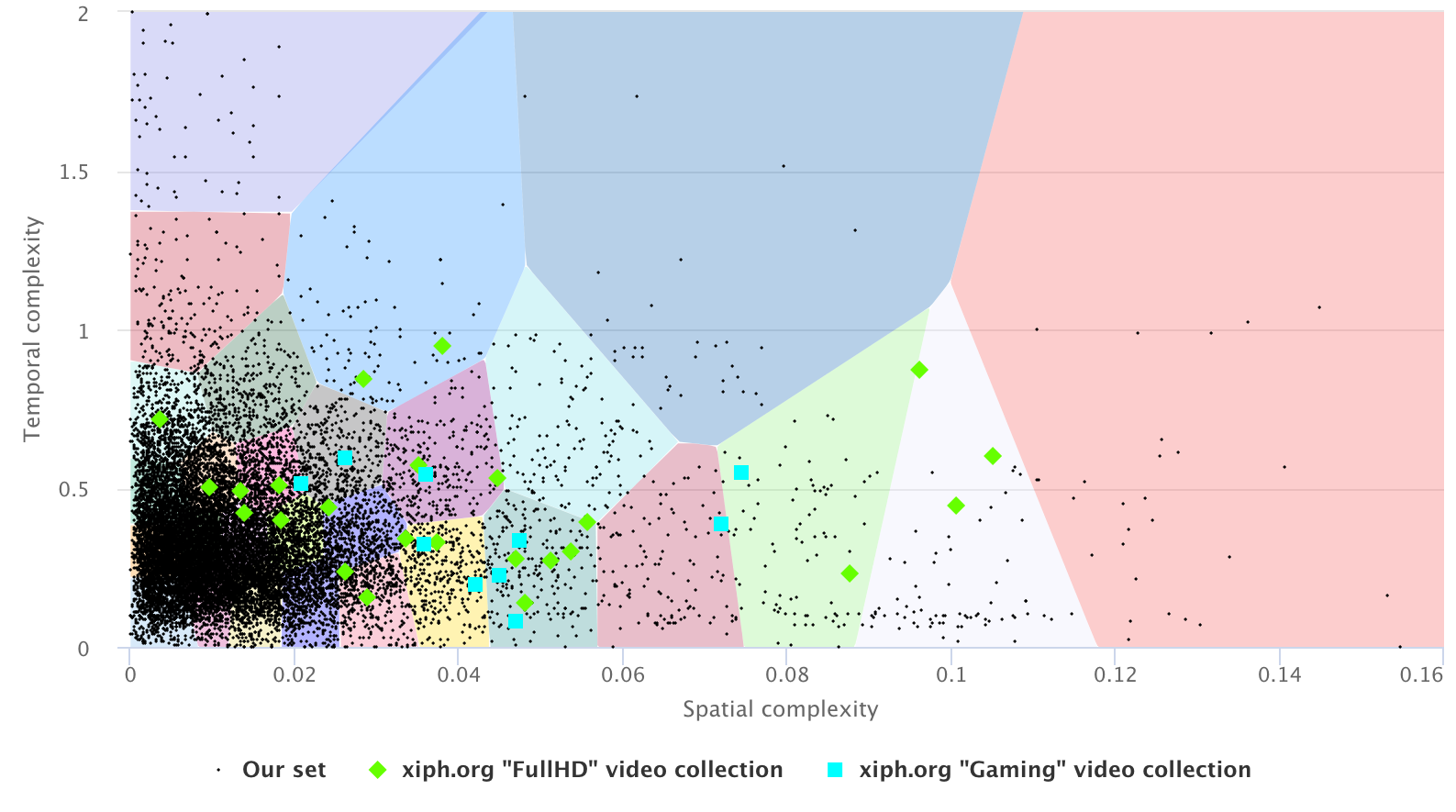

Picture 3 shows that most real user videos don’t have high complexity. This distribution tells us which cases matter most for encoding performance. For example, one codec may be tuned to perform well on simple cases while giving unpredictable results on complicated videos, and another codec may show stable but average quality in all cases. As a result, the first one would show better results more often, because simple videos are so widespread on the internet.

With this knowledge, we decided to analyse the distribution of videos from the well-known and widely used collection on the media.xiph.org website. The result is presented in Picture 6. It shows that most of the videos from the xiph.org set have high spatial and temporal complexity, which codecs rarely face in everyday use.

Picture 6. The area with the most frequent spatial/temporal complexity values is not covered by xiph.org videos.

Conclusion: what’s next? Plans & suggestions

Video compression and processing techniques are developing rapidly. New approaches often focus on a specific problem and require special test sets. The same is true of user videos: we observe growth in the number of videos and the appearance of new video classes, such as footage from drones and action cameras. We are improving our technique to create more representative video sets, and we are currently working on the following tasks:

- Developing new features to distinguish videos and perform high-dimensional clustering
  - We use deep learning to classify videos by content
  - We are also implementing new physical features that could divide and classify videos by many parameters
- Weighting of performance results
  - Knowledge of the video distribution allows us to weight algorithm performance results according to how “ordinary” a video is
- Testing the representativeness of other open video collections
  - We received a request to test the MPEG video set
  - Checking the LIVE Mobile Stall Video Database

Thank you for reading, and use good video sets to evaluate your algorithms!

[1] C. Chen et al., “A Subjective Study for the Design of Multi-resolution ABR Video Streams with the VP9 Codec,” 2016.